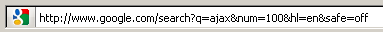

That’s right… today Matt Cutts completely reversed his opinion on pages indexed in Google that are nothing more than copies of auto-generated snippets.

Back in March of 2007, Matt discussed search results within search results, and Google’s dislike for them:

In general, we’ve seen that users usually don’t want to see search results (or copies of websites via proxies) in their search results. Proxied copies of websites and search results that don’t add much value already fall under our quality guidelines (e.g. “Don’t create multiple pages, subdomains, or domains with substantially duplicate content.” and “Avoid “doorway” pages created just for search engines, or other “cookie cutter” approaches…”), so Google does take action to reduce the impact of those pages in our index.

But just to close the loop on the original question on that thread and clarify that Google reserves the right to reduce the impact of search results and proxied copies of web sites on users, Vanessa also had someone add a line to the quality guidelines page. The new webmaster guideline that you’ll see on that page says “Use robots.txt to prevent crawling of search results pages or other auto-generated pages that don’t add much value for users coming from search engines.” – Matt Cutts
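To make that guideline concrete, here is a minimal sketch of checking a robots.txt rule with Python's standard `urllib.robotparser`. The `/search` path, the `example.com` URLs, and the `is_crawlable` helper are assumptions for illustration only; they are not taken from Google's guidelines or from any particular site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the /search path is an assumed location for
# internal search results pages, not something the guideline specifies.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # An internal search results page versus an ordinary content page.
    print(is_crawlable(ROBOTS_TXT, "https://example.com/search?q=widgets"))
    print(is_crawlable(ROBOTS_TXT, "https://example.com/about"))
```

Keep in mind that a `Disallow` rule only asks crawlers not to fetch matching URLs; how a given search engine treats pages it cannot crawl is up to that engine.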

Now, while the Google Webmaster Guidelines still specifically instruct webmasters to

Adding images to your blog posts can make them much more visually appealing to your readers. This in turn can increase the likelihood that someone will link to that post or subscribe to your feed, which will of course in the long run help to improve your rankings and traffic. The internet is chock full of images, many of which will fit perfectly with that blog post or article that you are writing. The problem is, however, finding images that are both high quality and that you are actually allowed to use.

Over the past couple of weeks, one of the biggest concerns about SEOmoz’s new Linkscape tool (which I recently blogged about in reference to the

Donna is definitely one of my bestest friends. She gets me, we think alike, and when I get stuck on an issue she’s always there to help me, even if it’s just moral support (although usually it’s in the form of information I need when my brain is just plain overloaded). I love her to death. Thing is, Donna is from Louisiana, and they don’t always do things in those parts in a way that I would call, um… normal.

Ok, so, looks like Rand and gang finally decided to reveal their top-secret recipe about how they gathered all that information on everybody’s websites without anyone noticing what they were doing. There was
